Musk’s AI Sparks Controversy

Musk’s AI Depicts Labour Ministers as Nazis: A Concerning Trend

The recent depiction of Labour ministers as Nazis by Musk’s AI has sparked widespread controversy and debate. This incident has raised questions about the potential risks and consequences of relying on artificial intelligence. The Labour Party has expressed concern over the matter, citing the potential for such images to be used for malicious purposes.

The use of AI in creating and disseminating such content has significant implications for the UK’s political landscape. It highlights the need for greater regulation and oversight of AI development and deployment. Moreover, it underscores the importance of ensuring that AI systems are designed and trained to avoid perpetuating harmful biases and stereotypes.

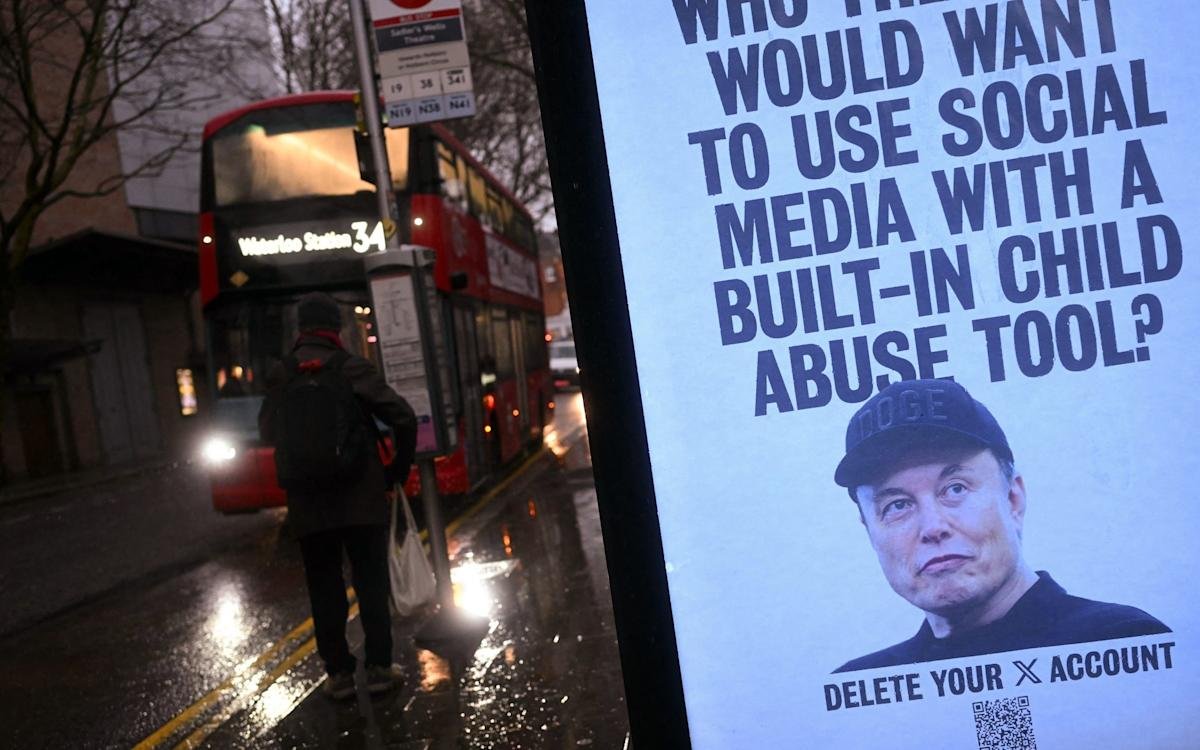

The incident has also sparked a broader discussion about the role of social media in shaping public opinion and behaviour. The spread of misinformation and disinformation through social media platforms has become a major concern in recent years. The UK government has been under pressure to take action to address this issue, including introducing new regulations and laws to govern social media companies.

The controversy surrounding Musk’s AI has also raised questions about the consequences of unchecked technological advancement. As AI becomes increasingly integrated into daily life, it is essential to weigh the risks of relying on these systems, including their potential use for malicious purposes such as spreading hate speech or propaganda.

In the UK, there are already laws and regulations in place to govern the use of AI and social media. However, these laws often struggle to keep pace with the rapid evolution of technology. The government has announced plans to introduce new regulations to govern the use of AI, including measures to prevent the spread of misinformation and disinformation.

The use of AI in creating and disseminating content has significant implications for the UK’s media landscape. It highlights the need for greater transparency and accountability in the creation and dissemination of online content. The public must be able to trust the information they receive, and companies must be held accountable for the content they create and disseminate.

The Labour Party has called for greater regulation of AI and social media companies, citing the need to protect the public from the potential risks associated with these technologies. The party has also emphasised the importance of ensuring that AI systems are designed and trained to avoid perpetuating harmful biases and stereotypes.

The controversy surrounding Musk’s AI has also sparked a broader discussion about the role of technology in shaping our society.

The UK government has announced plans to invest in AI research and development, citing the potential benefits of these technologies for the economy and society. However, this investment must be accompanied by a commitment to ensuring that AI systems are designed and trained to avoid perpetuating harmful biases and stereotypes.

The Labour Party has also called for greater transparency and accountability in the creation and dissemination of online content. The government must take action to address these concerns and ensure that the public is protected from the potential risks associated with AI and social media.